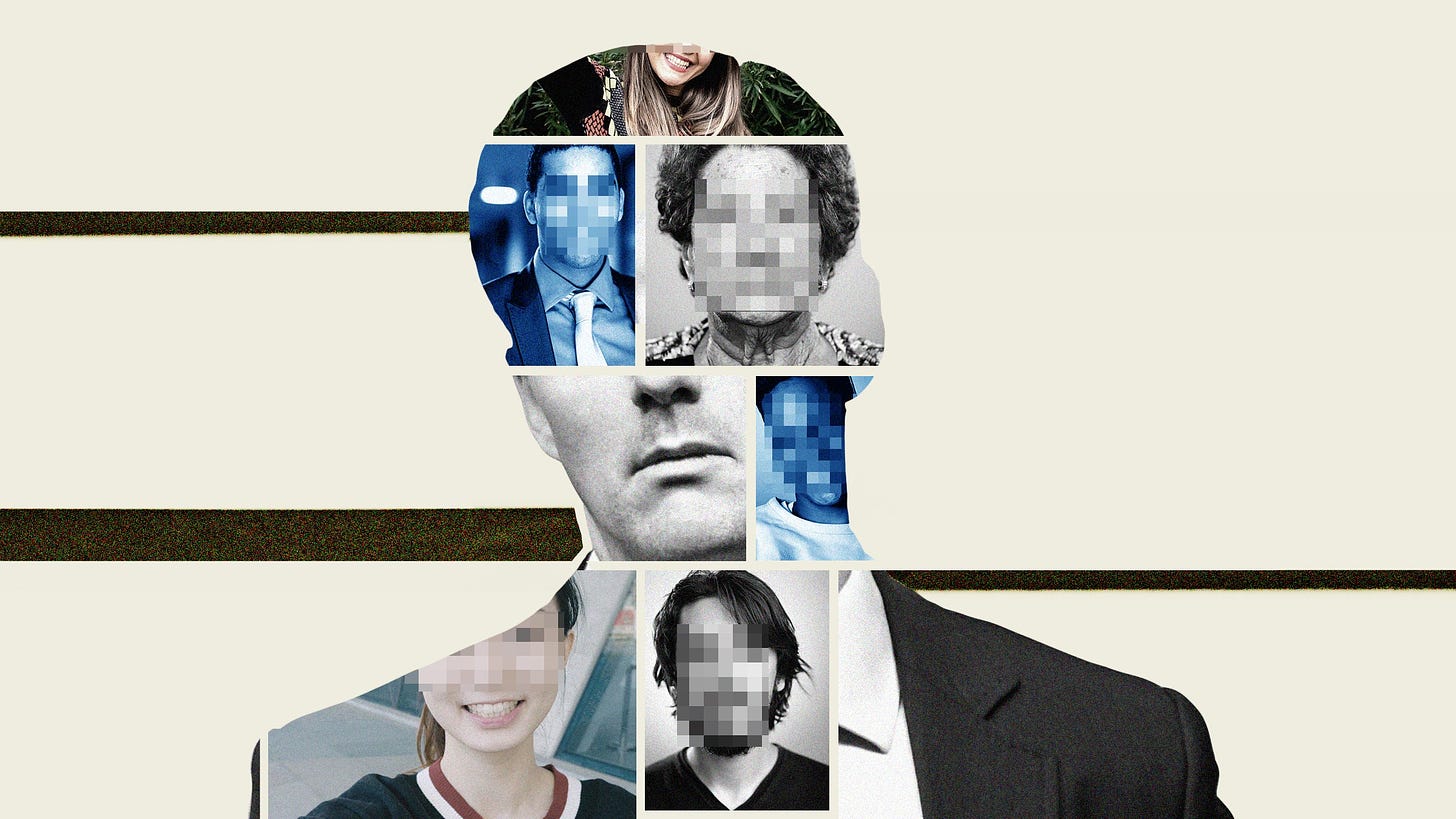

Clearview AI is an American facial recognition company that provides software to companies, law enforcement, universities, and individuals. It scraped most of our faces from the internet to train its facial recognition system and build its database.

Facebook, LinkedIn, Twitter, and YouTube objected, but the scraping went ahead anyway.

Now it appears Clearview AI is closer t…
