Hey Guys,

This is AiSupremacy Premium,

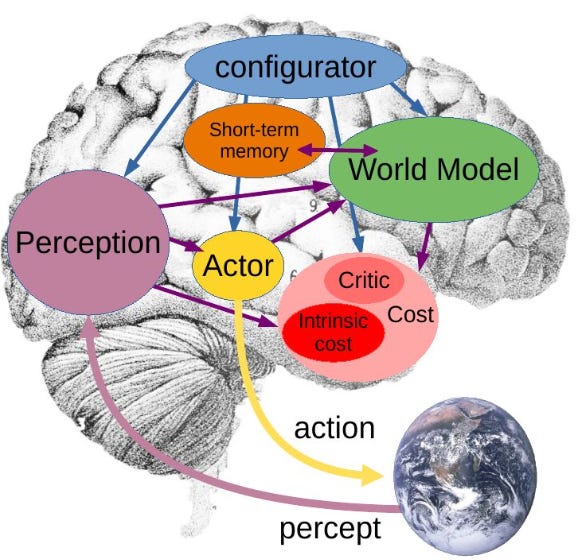

So yesterday I wrote an off-the-cuff post about the work and legacy of Yann LeCun that was not well received. Imagine the timing: the very next day, he announces a major paper that distills much of his thinking over the last 5 or 10 years about promising directions in AI.

When it comes to A.I. at the intersection of news, society, technology and business, I'm an opportunist, so without further ado, let's get into it!
